Simplify how you work

Secure collaboration with anyone, anywhere, on any device

Security

Protecting your sensitive files is a top priority. That's why we bring you advanced security controls, intelligent threat detection, and complete information governance. But since your needs don’t stop there, we also offer strict data privacy, data residency, and industry compliance.

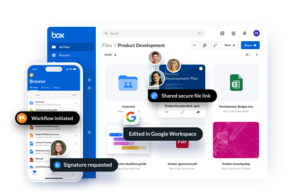

Seamless collaboration

Your business depends on collaboration between lots of people, from teams and customers to partners and vendors. The Content Cloud gives everyone one place to work together on your most important content — and you get peace of mind that it's all secure.

Powerful e-signatures

Sales contracts, offer letters, vendor agreements: Content like this is at the heart of business processes, and more and more processes are going digital. With Box Sign, natively integrated e-signatures included in your Box plan, you get a cost-effective way to power your business.

Workflow

Manual, cumbersome processes waste hours each day. So we let anyone automate the repeatable workflows that are key to your business, like HR onboarding and contract management. Workflows move faster, and you focus on what matters most. It's a win-win.

1,500+ app integrations

With the Content Cloud, you get a single, secure platform for all your content — no matter where it’s created, accessed, shared, or saved. More than 1,500 seamless integrations mean teams can work the way they want without sacrificing security or manageability.

Put AI to work, securely

Bring the best of content management to your data, and empower teams with insights that boost productivity. Get answers from your largest documents, create content in seconds, and make mission-critical decisions faster. And do it all while maintaining Box’s enterprise-grade security, compliance, and privacy standards.

Open APIs

With APIs, first-party SDKs, integrated developer tools, and rich documentation, you can customize and extend Box to suit your business needs. Automate key workloads, customize your Box experience, and securely connect your business apps.

Content migration

Accelerate your move to the Content Cloud with Box Shuttle, our market-leading content migration tool. It’s fast, easy to use, and cost-effective. Best of all, it’s built with the full power and security of Box, so you can get more from your content.

Admin controls

User management is simple with Box. Intelligent monitoring and reporting tools give you a bird’s-eye view of how content is being shared and accessed across your organization. You get full visibility and control, and your teams get more done.